How non-profits really see AI: Expectations, barriers, and readiness

Insights from our survey on where AI creates value, and what’s getting in the way

Most conversations about AI in the charity sector start with tools: “Should we use ChatGPT?” “Do we need a chatbot?” But the real question for non-profits is much simpler: “Where can AI genuinely strengthen our mission without compromising trust, values or human connection?”

To understand how organisations are approaching this, we surveyed a wide range of people working in and around charities and the not-for-profit sector. The aim was not to assess tools, but to understand expectations, priorities and readiness across the sector.

Across the group, respondents rated AI’s importance today at roughly 6/10, rising to nearly 9/10 within three years. But their readiness to adopt it sits closer to 5/10, with notable concerns around ethics, data quality and responsible use.

In short: non-profits expect AI to matter more, even if most don’t yet feel fully prepared.

This article explores that gap: where AI can truly make a difference, what’s holding organisations back, and what “AI-readiness” looks like in practice.

Doing more with less: The non-profit reality

Non-profits are operating under increasing pressure. Demand for services is rising, expectations from funders and regulators are growing, yet budgets remain tight and core costs are often underfunded. Digital investment frequently takes a back seat to frontline delivery, leaving many organisations with systems and processes that have evolved over years of “making do.”

In this context, AI is sometimes presented as a quick fix that can automate everything, personalise every message, and unlock instant insight.

But our survey results reflected a more grounded reality. Charities see AI as a potential enabler, particularly for improving data use, fundraising, and operational efficiency, but they are cautious about adopting it without solid foundations, clear governance, or reliable data. There is also concern about choosing tools that don’t fit their needs or that create more complexity than value.

As one respondent put it: “The cost of development and implementation will mean we could be left behind.”

Another added an increasingly common consideration: “Concern is the environmental impact in terms of AI use and also the need to get your data sorted first.”

These concerns reflect sector-wide challenges: the environmental footprint of AI, the difficulty of achieving clean and consistent data, the need to ensure sensitive information is handled ethically and responsibly, and the risk that organisations with fewer resources may fall further behind as adoption accelerates.

Where AI really adds value for non-profits

When we asked, “Where do you think AI can bring the most value to non-profits?” the top answers were:

33% – Data analysis and reporting

22% – Operations and administration

15% – Fundraising and donor engagement

15% – Programme delivery and service impact

Charities want AI to help them use their data more effectively, reduce manual workload, and free up time for mission-critical work.

The areas where respondents see the greatest value reflect this focus.

1. Data analysis and reporting: Turning information into insight

The strongest signal from the survey was that charities struggle not with collecting data, but with making sense of it. Programme outcomes, CRM records, surveys, case notes, digital services: the volume keeps growing, but capacity does not.

AI can help by:

- identifying patterns across messy or incomplete datasets

- automating recurring reports for boards and funders

- consolidating qualitative and quantitative data into clearer formats

- flagging early indicators of risk or change

AI doesn’t replace strategic judgement, but it enables teams to get to clarity faster, which is something many charities struggle to achieve with existing capacity.

AI can help charities become far more efficient and insight-driven, ensuring donor money is spent smarter and improving impact and transparency.

2. Operations and administration: Reducing friction and freeing capacity

Alongside the challenge of interpreting data, many charities face a different kind of pressure: operational tasks that absorb disproportionate amounts of time. Manual processes, siloed systems, and inconsistent documentation create friction that slows teams down.

AI can make an immediate difference by automating high-volume, low-value tasks such as data entry, document processing, summarising case notes, handling internal queries, or supporting volunteer coordination.

For many organisations, even small efficiency gains here can have an outsized impact on capacity.

3. Fundraising and donor engagement: Amplifying, not replacing, human relationships

Fundraising is one of the most resource-pressured areas of the sector – heavy on data, reliant on personal connection, and critical for organisational sustainability. Respondents see AI as a way to enhance this work, not replace it.

Based on survey responses, common practical applications include:

- segmenting donors based on real behavioural patterns

- identifying likely upgraders or at-risk supporters

- prioritising stewardship based on past engagement

- drafting initial versions of campaign content

Used well, AI doesn’t depersonalise fundraising. It strengthens it by reducing administrative load and helping fundraisers understand each donor more clearly, enabling deeper, more tailored relationships instead of generic, one-size-fits-all interactions.

What’s really getting in the way

If the opportunities are so clear, why isn’t AI already embedded across the sector?

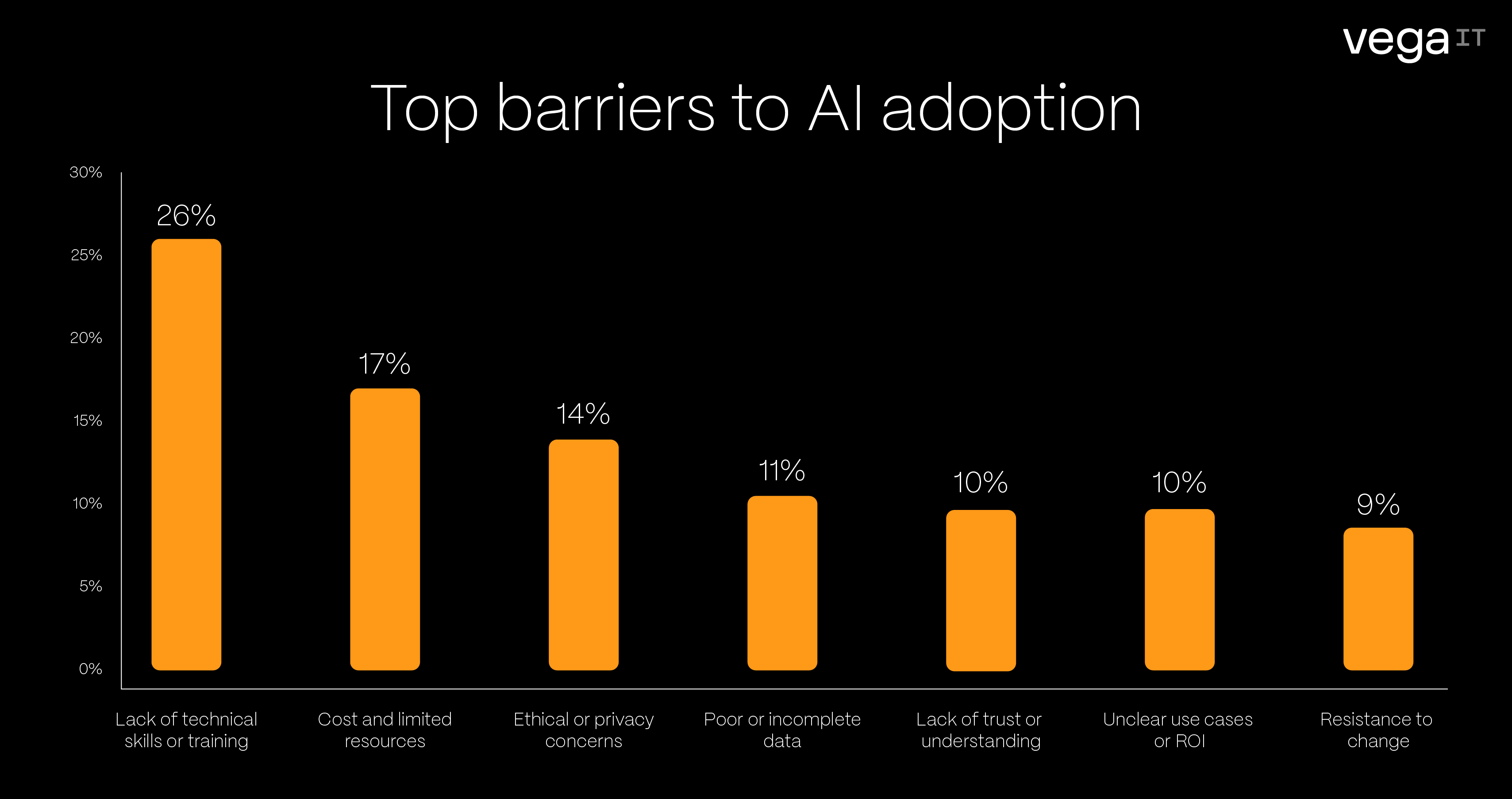

Our survey highlighted several obstacles, but three stood out:

26% – Lack of technical skills or training

17% – Cost and limited resources

14% – Ethical or privacy concerns

Other common worries included poor or incomplete data, lack of trust or understanding of AI, unclear ROI, and resistance to change.

Let’s unpack a few of these.

Skills and capacity

Most non-profits simply don’t have in-house data scientists, machine learning specialists, or dedicated AI roles. Digital and IT teams, where they exist, are often small, generalist, and operating at full stretch. This means AI can feel like “one more thing” to understand and manage, competing with safeguarding requirements, fundraising pressures, compliance work, and service delivery.

The barrier isn’t just a lack of technical expertise; it’s the absence of time, confidence, and structured training to explore AI safely and meaningfully. Several respondents emphasised that capability building matters as much as the technology itself.

As one put it, an AI-ready charity is “a charity that has clean data and a good training team in place.”

And, echoing that point, Matt Collins from FatBeehive noted:

“An AI-ready charity is one that feels confident using clear, well-organised content and safe data practices so AI can genuinely help its team, supporters and community.”

AI adoption in charities is less about technical ambition and more about organisational readiness.

Data readiness

“An AI-ready charity is one with all the foundational systems in place, holding robust data and operating smoothly with process and procedures in a compliant way.”

Many respondents echoed this point: data readiness is one of the biggest determinants of whether AI can deliver real value. Years of operating with limited resources often leave information scattered across systems, spreadsheets, emails and legacy databases. The issue isn’t a lack of data; it’s that the data is rarely consistent, connected or reliable enough to support automation or meaningful insight.

This came through strongly in our survey, where “poor or incomplete data” and “lack of trust or understanding” were cited as major blockers.

AI cannot simply be added on top of fragmented processes. Without structure and standards, outputs become unreliable and risks increase, especially when handling sensitive information.

The good news is that strong data foundations don’t require a major overhaul. They come from small, steady improvements: consistent data entry, clear ownership, standardised definitions, systems that talk to each other, and processes designed with reporting in mind.

Ethics, trust, and responsibility

Respondents raised questions around fairness, transparency, and data protection, especially where AI might influence decisions or automate interactions. As Duchenne UK put it:

“A concern would be ensuring that AI technologies are used ethically and responsibly, particularly when dealing with sensitive patient data. There’s a need for robust data protection measures, transparency, and ensuring that AI models are not biased and are fair in their application.”

Others emphasised the need for clarity and restraint: “It must be done responsibly.”

And for some, the concern was relational rather than technical: “AI seamlessly supporting organisational strategy without sterilising the human touch.”

These aren’t barriers to innovation; they’re reminders that AI in charities must be held to a high ethical standard. That doesn’t require complex frameworks, just clear principles:

- what AI should and should not be used for

- transparency about how data is used

- human oversight where decisions affect people

- alignment with organisational values

- consideration of environmental impact

Cost and the fear of “wasted innovation”

Cost was another significant barrier highlighted in the survey: not just the price of AI tools, but the wider investment required to make them work, including integration, training, governance, and ongoing support. For many charities, these hidden costs matter more than the tools themselves.

The concern isn’t simply affordability. It’s the fear of committing scarce resources to technology that doesn’t deliver meaningful improvement, set against the fear of not being able to invest at all. One respondent captured this anxiety clearly:

“The cost of development and implementation will mean we could be left behind.”

The risk runs in both directions:

- moving too slowly and missing opportunities to increase impact and efficiency

- or moving too fast and investing in solutions that add complexity without delivering real value

This is why a mission-first approach matters. AI should be evaluated based on clear, tangible outcomes, such as stronger services, more effective fundraising and improved workflows, rather than a sense of urgency or technological pressure.

What an “AI-ready” charity really looks like

At the end of our survey, we asked: “What does an ‘AI-ready’ charity look like to you?”

Despite different contexts, the responses pointed to the same idea: readiness is about foundations, not sophistication.

“Data confident, open to innovation, and focused on using technology to enhance human connection.” – Norwood

“Clean data and a good training team in place.” – Relatives for Justice

“Clear policies for defined usage.” – Buglife

Across all answers, five themes stood out:

- Clarity of purpose – AI is used intentionally, in service of the mission.

- Solid foundations – reliable data, clear processes, and systems that work together.

- People and skills – staff feel supported and trained enough to experiment safely.

- Governance and ethics – policies define where AI is appropriate and how data is handled.

- Human-centred design – AI enhances relationships and communication rather than replacing them.

No charity needs all of this before getting started. But these principles offer a practical way to assess readiness and plan next steps.

A practical path forward: AI for mission, not for show

So where do you start if you see the potential of AI but feel the weight of the challenges?

A practical approach is to begin small, stay focused on mission, and build the foundations as you go.

1. Start small, with real problems – Identify one or two areas where AI could relieve pressure or unlock insight:

- a monthly report that takes days to prepare

- unstructured data you can’t use

- repetitive admin that drains capacity

- simple service user questions that need quick answers

Define success in clear, mission-aligned terms (faster response times, less admin, better insight, improved experience) and build a small pilot around that.

2. Build a data-aware culture – AI readiness doesn’t begin with advanced tooling. It begins with consistent, usable data.

Small steps make a big difference:

- standardise how key information is captured

- clean up one important dataset at a time

- make sure people understand why data quality matters

This isn’t a transformation project; it’s a gradual shift toward being data confident.

3. Work with partners who understand context – Several respondents warned about one-size-fits-all solutions. The right technical partner should:

- respect your safeguarding, ethics, and accessibility requirements

- work with your existing systems

- measure success in terms of impact, not deployment

AI works best when it complements the organisation, not when it forces it into a new shape.

4. Make ethics part of the design, not an afterthought – Instead of pausing innovation because of ethical concerns, bring them into the process early:

- decide what should never be automated

- ensure human oversight where decisions affect people

- be transparent about how AI is used

- consider environmental impact when choosing tools

This doesn’t require a long policy document, just clear principles people can act on.

The bottom line: AI as an enabler, not the main character

One message came through clearly in our survey: AI is most valuable when it acts as an enabler, creating space, clarity and capacity, so teams can focus on the work that requires human judgement, empathy and trust.

So instead of asking “Are we ready for AI?”, a more useful starting point is:

“Where could a bit of intelligence and automation help us serve people better?”

Answer that honestly, and the path forward becomes much clearer.